Mozilla could probably reverse engineer the protocol, but this could have some negative consequences.


It is in Google's interest to keep full control, which means making phone apps rely on its module and doing the same for desktop apps.


I know applications on Android do it through a Java library and SDK that is non-trivial to port to the desktop.

Based on the downvotes, I guess I should have pasted the original comment. I never attempted to replicate chrome.tabCapture or chrome.desktopCapture, but that'd be possible given enough time and someone more skilled with reverse engineering protocols.

Edit: I haven't looked into how VLC casts videos, but I should take a look. However, you could use it to start, cancel, play, pause, and adjust volume.
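As a rough illustration of what "start, cancel, play, pause, and adjust volume" could look like on the wire, here is a minimal sketch of the JSON payloads commonly documented for the Cast v2 protocol. The helper names (`launch`, `setVolume`, `mediaCommand`) and the `CastCommand` shape are hypothetical and not taken from the extension discussed here; the namespaces and the default media receiver app ID are the publicly known ones.

```typescript
// Sketch only: helper names are hypothetical, not from the original extension.
const RECEIVER_NS = "urn:x-cast:com.google.cast.receiver";
const MEDIA_NS = "urn:x-cast:com.google.cast.media";
const DEFAULT_MEDIA_RECEIVER = "CC1AD845"; // app ID of the default media receiver

interface CastCommand {
  namespace: string;
  payload: { [key: string]: unknown };
}

// Each request carries an incrementing ID so replies can be matched to it.
let nextRequestId = 1;

// Start a receiver application on the device.
function launch(appId: string = DEFAULT_MEDIA_RECEIVER): CastCommand {
  return {
    namespace: RECEIVER_NS,
    payload: { type: "LAUNCH", appId: appId, requestId: nextRequestId++ },
  };
}

// Device volume is a level between 0 and 1; out-of-range input is clamped.
function setVolume(level: number): CastCommand {
  const clamped = Math.min(1, Math.max(0, level));
  return {
    namespace: RECEIVER_NS,
    payload: { type: "SET_VOLUME", volume: { level: clamped }, requestId: nextRequestId++ },
  };
}

// Play, pause, or stop (cancel) an existing media session.
function mediaCommand(type: "PLAY" | "PAUSE" | "STOP", mediaSessionId: number): CastCommand {
  return {
    namespace: MEDIA_NS,
    payload: { type: type, mediaSessionId: mediaSessionId, requestId: nextRequestId++ },
  };
}
```

These objects only describe the messages; actually delivering them still requires a connection to the device, which is the hard part described in the comments.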

Even so, I never really reverse-engineered much beyond YouTube due to lack of time. Firefox's desktop APIs wouldn't let me open a UDP socket, so I had to implement it via a Node app. The issue back then was creating a self-contained executable with mDNS and a Castv2 implementation that Firefox could start. I wrote a simple Node app running a WebSocket server; the extension would imitate the Chrome extension's interface and send messages to the WebSocket, which the executable would forward to the Chromecast via the Castv2 protocol. Folks have been asking, but I've been continually swamped with projects. I have an old codebase I have yet to release due to bitrot.

The internals probably aren't stable enough to rely on anyway. It could run in a headless Chromium instance (I tried NW.js), but there's no simple workaround for triggering the casting programmatically, without the destination dialog. Getting it to run within Firefox isn't feasible. The cast extension uses a bunch of internal chrome.* APIs.

It'd be great to get the chrome.tabCapture / chrome.desktopCapture extension APIs that the cast extension uses. A custom receiver could also work, but it's something I've been trying to avoid. WebRTC in the native application isn't as simple as you'd think unless you use an embedded browser. Another option is making a direct WebRTC connection from the browser to the Chromecast and trying to hook into the receiver Chrome uses for tab sharing. It would mean transforming a WebRTC stream into a live stream that the default receiver could play (HLS/DASH). The main problem is getting that to the Chromecast. Tab audio capture doesn't work and it's buggy (causes crashes); system audio capture isn't possible in the browser but could be done in the native application (there could be sync issues, not sure). The screen can be captured via WebRTC, but the tab would need to be drawn to a canvas (drawWindow() / tabs.captureTab()) first.
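For the Castv2 side of such a bridge, messages on the socket are generally described as a serialized protobuf preceded by a 4-byte big-endian length. The sketch below shows only that framing layer and treats the protobuf body as opaque bytes. `frame` and `FrameReader` are hypothetical names, and this is not the code from the Node app mentioned above.

```typescript
// Sketch: length-prefixed framing (4-byte big-endian length, then the body).
// The body would be a serialized protobuf message; it is opaque here.

function frame(body: Uint8Array): Uint8Array {
  const out = new Uint8Array(4 + body.length);
  new DataView(out.buffer).setUint32(0, body.length, false); // false = big-endian
  out.set(body, 4);
  return out;
}

class FrameReader {
  // Bytes received so far that do not yet form a complete frame.
  private pending = new Uint8Array(0);

  // Feed one chunk from the socket; returns every message body completed by it.
  push(chunk: Uint8Array): Uint8Array[] {
    const merged = new Uint8Array(this.pending.length + chunk.length);
    merged.set(this.pending, 0);
    merged.set(chunk, this.pending.length);

    const bodies: Uint8Array[] = [];
    let offset = 0;
    while (merged.length - offset >= 4) {
      const view = new DataView(merged.buffer, merged.byteOffset + offset, 4);
      const length = view.getUint32(0, false);
      if (merged.length - offset - 4 < length) break; // wait for more data
      bodies.push(merged.slice(offset + 4, offset + 4 + length));
      offset += 4 + length;
    }
    this.pending = merged.slice(offset);
    return bodies;
  }
}
```

A reader like this matters because a socket can deliver half a message, or several messages at once, in a single chunk.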

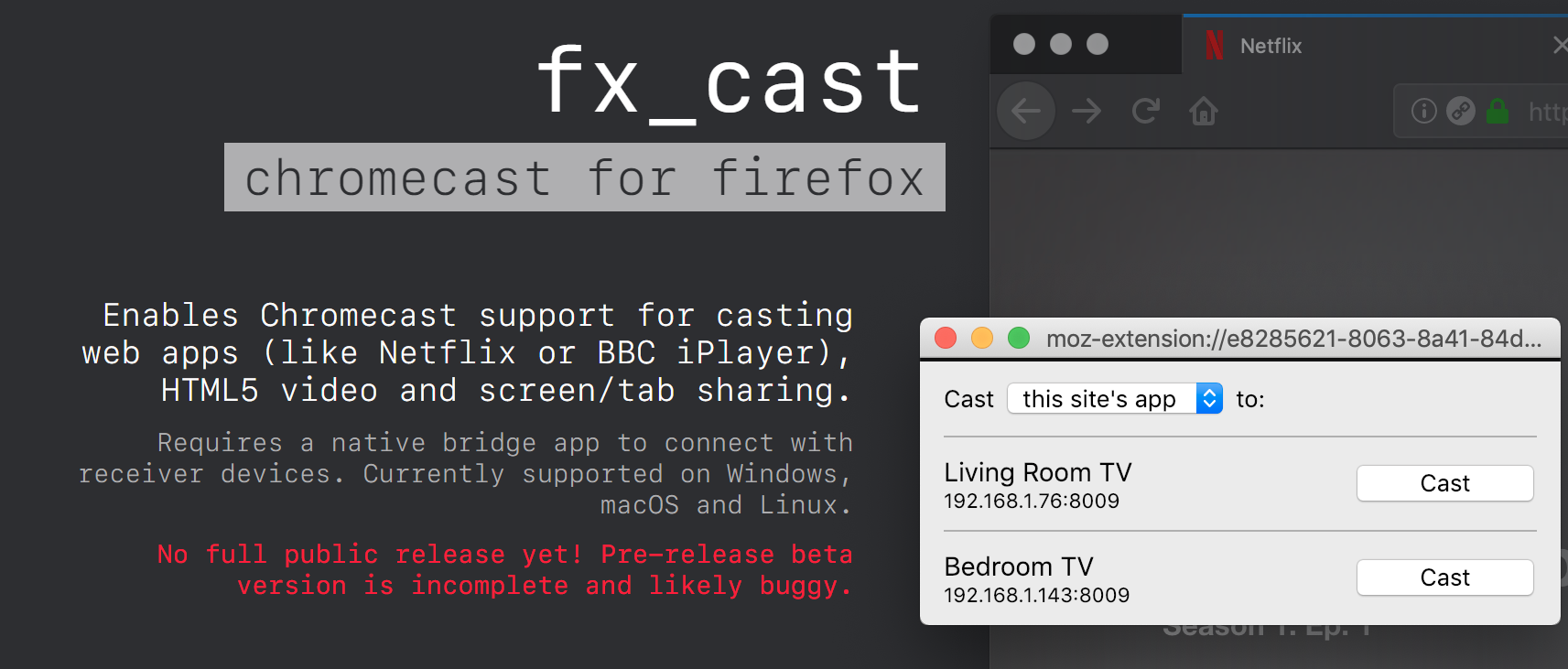

If there's anyone working on / interested in working on this now, it'd be great to join up and cooperate on this. Looking at previous threads, I know there are a few people who have worked on this in the past and seem to have stopped. Casting via a cast button in an application/player will launch the respective receiver as normal. It will only work if the Chromecast can access that video. Casting will launch the default receiver. It uses native messaging, since there's no way to implement it with extension APIs alone.
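For context on the native messaging part: the browser and the native application exchange UTF-8 JSON messages over stdin/stdout, each preceded by a 32-bit length in native byte order. The following is a minimal sketch of that framing, assuming a little-endian host. The function names and the example message shape are hypothetical and do not reflect the extension's actual message format.

```typescript
// Sketch of native-messaging framing: a 32-bit length prefix followed by
// UTF-8 encoded JSON. Little-endian byte order is ASSUMED here (it is the
// native order on x86 and most ARM systems).

function encodeNativeMessage(message: unknown): Uint8Array {
  const json = new TextEncoder().encode(JSON.stringify(message));
  const out = new Uint8Array(4 + json.length);
  new DataView(out.buffer).setUint32(0, json.length, true); // true = little-endian
  out.set(json, 4);
  return out;
}

function decodeNativeMessage(bytes: Uint8Array): unknown {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const length = view.getUint32(0, true);
  if (bytes.byteLength < 4 + length) {
    throw new Error("incomplete message");
  }
  return JSON.parse(new TextDecoder().decode(bytes.subarray(4, 4 + length)));
}
```

In a real bridge, the native application would read these frames from stdin and write replies to stdout; the extension side never sees the length prefix, since the browser handles it.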
